Through hands-on collaboration with experienced mentors, the SONiC Mentorship Program empowers contributors to address real-world challenges and advance open networking technologies.

In this spotlight, we speak with Gaurav Nagesh, a graduate student from Illinois Tech, about his work on improving Redis performance and stability across SONiC platform daemons, helping make the system more efficient, resilient, and scalable under load.

About the Mentee

I am Gaurav Nagesh, a graduate student from Illinois Tech with a master’s degree in CS and a specialization in Distributed Systems. One of my biggest motivations for applying to the SONiC Mentorship Program was seeing how SONiC is actually used in production by so many companies in massive data centers. The scale at which it runs, its adoption rate, the way the community actively contributes to it, and its acceptance in the industry even beyond hyperscalers all stood out to me immediately.

Reading that, according to a Gartner report, SONiC would be like the “Linux of NOS” made it even more exciting. Moreover, getting an opportunity to be mentored by experienced industry engineers and learn directly from people who actively work on SONiC felt like a huge bonus. Finally, the idea that I could be part of a project that powers so many real-world data centers, and make an impact, felt like a rare opportunity that could not be missed.

Another reason this project caught my attention is that it aligned perfectly with my background. I’ve worked on benchmarking and profiling systems to improve performance, so the nature of this task felt like a great fit. On top of that, my brief experience with SDN controllers and programmable networks gave me a solid foundation in open-source networking to understand SONiC better and dive deep into the project.

Q: What project did you work on, and why is it important to SONiC?

The project I worked on was titled “Enhancing Redis Access Efficiency and Robustness in SONiC.”

When we talk about Redis performance optimization in SONiC, there are usually three major areas to look at: optimizing the application code and how it interacts with Redis, improving the Redis client library itself, or tuning the Redis server for the specific workload. In this project, my focus was on the first part, optimizing things from the application-code perspective and improving how the daemons talk to Redis.

The main idea of the project was to analyze how SONiC’s critical services and platform daemons interact with Redis, understand where unnecessary Redis traffic was coming from, and improve both the efficiency and stability of these interactions.

This meant profiling the current Redis access patterns, setting up baseline load tests, and benchmarking behavior across different hardware platforms to see how Redis performed under varying workloads.

Overall, the project aimed to reduce redundant operations, avoid long-latency interactions, and make sure the system remained responsive even under load.

The main goals were:

- Analyze the existing Redis access patterns across SONiC’s critical services and platform daemons

- Identify and eliminate unnecessary Redis operations, especially repeated GET/SET patterns

- Improve responsiveness under load by reducing long-latency Redis interactions

- Benchmark and compare key metrics before and after optimization

- Add stronger exception-handling paths to prevent critical daemons from crashing

Q: What were your main technical contributions?

During the mentorship, I focused on one of the key subsystems in SONiC, sonic-platform-daemons, and more specifically on some of its most critical processes: pcied, psud, thermalctld, ledd, and parts of the Redis client library. These components involve both Python and C++ code, so the work spanned both languages.

The first step was to understand how each of these daemons was interacting with Redis. I approached this in two ways. One was profiling the code using tools like py-spy to generate flamegraphs and identify hot paths—especially around Redis operations. The second approach was tracing every Redis operation end-to-end: which Redis table was being accessed, what command was executed, how long it took, what data was written or read, and the size of the payload. With this, I was able to collect key metrics such as total Redis operations performed, latency and throughput per table and per operation, time-series latency graphs, and CPU/memory usage.
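As a rough illustration of how per-table, per-operation metrics like these can be derived, the sketch below aggregates a list of trace records. The record layout (`table`, `op`, `latency_s`) and the function name `summarize` are assumptions made for this example, not the format used in the actual project.

```python
from collections import defaultdict


def summarize(records):
    """Group trace records by (table, op) and compute basic latency stats.

    Each record is assumed (hypothetically) to be a dict with the keys
    "table", "op" and "latency_s".
    """
    grouped = defaultdict(list)
    for rec in records:
        grouped[(rec["table"], rec["op"])].append(rec["latency_s"])

    summary = {}
    for key, latencies in grouped.items():
        total = sum(latencies)
        summary[key] = {
            "count": len(latencies),
            "mean_latency_s": total / len(latencies),
            "max_latency_s": max(latencies),
            # Operations per second of time spent inside Redis calls,
            # not per second of wall-clock time.
            "ops_per_s": len(latencies) / total if total > 0 else float("inf"),
        }
    return summary
```

Keeping the raw records and summarizing afterwards makes it possible to slice the same trace in different ways, for example per table for one report and per operation for another.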

To make this tracing possible, I wrote a custom tool, a Python module that automatically intercepts Redis operations from the target daemons. It works across multi-process and multi-threaded programs, requires almost no code changes, and can be toggled using an environment variable in the supervisord configuration file.
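The actual tool is not reproduced here, but the general idea of intercepting calls with almost no code changes can be sketched as follows. This is a minimal illustration under stated assumptions: the environment variable name `REDIS_TRACE`, the helper `trace_methods`, and the `FakeTable` stand-in are all hypothetical and do not come from SONiC.

```python
import functools
import os
import time

TRACE_ENV_VAR = "REDIS_TRACE"  # hypothetical name, not the real toggle


def trace_methods(cls, method_names, records):
    """Wrap the named methods of cls so each call is timed and recorded."""
    if os.environ.get(TRACE_ENV_VAR) != "1":
        return cls  # tracing disabled: leave the class untouched

    def make_wrapper(op_name, func):
        @functools.wraps(func)
        def wrapper(self, *args, **kwargs):
            start = time.perf_counter()
            try:
                return func(self, *args, **kwargs)
            finally:
                records.append({
                    "table": getattr(self, "table_name", "?"),
                    "op": op_name,
                    "latency_s": time.perf_counter() - start,
                    # Rough size: length of the stringified arguments.
                    "payload_size": sum(len(str(a)) for a in args),
                })
        return wrapper

    for name in method_names:
        setattr(cls, name, make_wrapper(name, getattr(cls, name)))
    return cls


class FakeTable:
    """Hypothetical stand-in for a Redis table wrapper; stores rows in a dict."""

    def __init__(self, table_name):
        self.table_name = table_name
        self._rows = {}

    def set(self, key, fields):
        self._rows[key] = dict(fields)

    def get(self, key):
        return self._rows.get(key)
```

Because the wrapping happens on the class rather than at each call site, the daemon code itself stays unchanged, and leaving the environment variable unset restores the original behavior.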

After analyzing all this data, I identified major areas of improvement and implemented them. Key contributions included:

- Conditional writes with change detection: The daemons were updating all attributes every cycle, even when nothing changed. I added logic to track previous states and write to Redis only when values actually changed (e.g., model number, serial number, revision, thresholds, LED states, etc.). This cuts down a large amount of unnecessary Redis traffic.

- Better Redis connection management: Many daemons were opening separate Redis connections for each table they wrote to. Since most of them are single-threaded and operations happen sequentially, this was wasteful. I consolidated this into a single connection reused for all writes across different tables on the same logical database (e.g., STATE_DB, which is db6 in Redis).

- Batching Redis operations using Pipeline: Instead of making individual set() calls for every attribute, I modified the logic to batch them using Redis Pipeline. This significantly improves throughput and reduces network overhead.

- Misc bug fixes: Along the way, I also made small bug fixes in swss-mgmt and platform-daemons wherever I found issues while testing and reading the codebase. These fixes have already been merged.
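The first three ideas in the list above (change detection, a single shared connection, and pipelined writes) can be combined in a small sketch. This is not the code from the pull requests: `ChangeTrackingWriter` and `FakePipeline` are hypothetical names, and the in-memory pipeline only mimics the queue-then-execute shape of a Redis pipeline so that the example runs without a Redis server or SONiC's own client library.

```python
_MISSING = object()  # sentinel for "never written", distinct from None


class FakePipeline:
    """Hypothetical in-memory stand-in for a pipeline on one shared connection."""

    def __init__(self, store):
        self._store = store
        self._queued = []
        self.round_trips = 0  # how many times a batch was actually sent

    def hset(self, key, field, value):
        self._queued.append((key, field, value))  # queue only, nothing sent yet

    def execute(self):
        for key, field, value in self._queued:
            self._store.setdefault(key, {})[field] = value
        sent = len(self._queued)
        self._queued.clear()
        if sent:
            self.round_trips += 1
        return sent


class ChangeTrackingWriter:
    """Queue a field only when its value differs from the last written value."""

    def __init__(self, pipeline):
        self._pipeline = pipeline
        self._last_written = {}  # (key, field) -> value confirmed by flush()
        self._pending = {}       # (key, field) -> value queued, not yet flushed

    def update(self, key, fields):
        queued = 0
        for field, value in fields.items():
            ident = (key, field)
            known = self._pending.get(ident, self._last_written.get(ident, _MISSING))
            if known != value:
                self._pipeline.hset(key, field, value)
                self._pending[ident] = value
                queued += 1
        return queued

    def flush(self):
        sent = self._pipeline.execute()
        # Commit the cache only after the batch went out without raising.
        self._last_written.update(self._pending)
        self._pending.clear()
        return sent
```

In this sketch a daemon loop would call `update()` for every table it manages and `flush()` once per cycle, so an unchanged cycle sends nothing and a changed cycle sends one batch over the shared connection. The cache is committed only after `execute()` returns, so a failed batch is retried on the next cycle rather than silently skipped.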

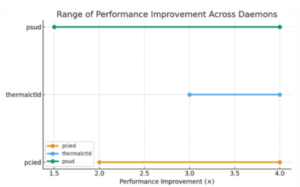

After implementing the changes and benchmarking them, we saw a significant improvement in throughput and a reduction in the overall time taken to execute the operations. The exact improvement may vary slightly in practice because of the dynamic nature of the daemons and external factors such as the current load on Redis, background processes, hardware conditions, and system resource availability, so some variance is expected in these results. Accounting for the variance across multiple benchmarking runs, the accompanying graph shows the range of performance improvement relative to the baseline for three daemons: pcied, thermalctld, and psud.

Here are the draft pull requests implementing the changes:

- pcied: Redis Performance Improvements

- psud: Redis Performance Improvements

- thermalctld: Redis Performance Improvements

Q: What challenges did you face, and what did you learn?

One of the main challenges I faced during the project was that most of my work targeted the pmon container, and many of the platform daemons inside it are platform-dependent. Because of this, developing and testing everything on the virtual testbed wasn’t always straightforward. Still, I tried to be as careful and disciplined as possible while writing and testing my changes in the virtual environment. For the actual benchmarking and platform-specific validation, my mentor helped by running the tests on physical hardware, which made the entire process much smoother.

On the technical side, I got to learn a lot of interesting things about SONiC’s architecture. For example, SONiC uses a microservice-style design, but unlike typical microservices where you run one service/process per container, here a single container runs multiple services/processes, all managed by supervisord. Another interesting detail was understanding how Redis is deployed: on single-ASIC devices, Redis runs in the host’s network namespace, but on multi-ASIC devices, you also have separate Redis instances running in different network namespaces—one per ASIC. These kinds of unique architectural decisions and the reasoning behind them were really insightful to learn.

I also got the chance to collaborate with a small SONiC team at Microsoft and present my work to them, and I received some great feedback that helped me refine the final changes.

I also spent a lot of time exploring different SONiC repositories to understand how everything fits together and how the whole system operates end-to-end. Reading open-source code written by engineers from top companies gave me a good sense of how things are structured in large real-world systems, why certain design decisions were made, and what alternative approaches could have been. It helped me spot patterns, best practices, and common styles used across the project. It also broadened my understanding of SONiC from an end-user perspective—its features, use cases, and how the various pieces interact.

Q: What impact has this mentorship had, and what are your next steps?

The main impact of this project is that it brings an overall improvement in Redis performance within the platform subsystem and adds more stability to how these daemons interact with the database. It essentially makes Redis access lighter, more predictable and more resilient under load. Along with that, the work also contributed to reducing unnecessary pressure on system resources. Some of the key improvements include:

- Reduced unnecessary memory allocations by re-using objects and avoiding duplicated or redundant resources.

- Increased throughput and reduced I/O and network overhead by:

  - Reusing the same Redis connection for pipeline operations instead of creating separate connections for each table

  - Batching operations using Redis Pipeline instead of sending individual commands

- Reduced Redis traffic (effectively increasing available bandwidth for other traffic in the system) by using conditional writes—only updating Redis when a change in table fields is actually detected.

Looking forward, as I mentioned earlier, while working across different components I’ve already been able to catch and fix a few important bugs and I plan to continue doing that as I explore more of SONiC. I want to extend this optimization work to other daemons across different subsystems, understand more internals and keep improving things wherever I can. My goal is to stay actively involved and grow as a contributor, and I genuinely hope to be a long-term contributor to SONiC, continuing to learn, experiment, and build as the project evolves.

Q: Is there anyone you’d like to acknowledge?

First and foremost, I want to thank my mentor, Vasundhara Volam. None of this would have been possible without her. I’m extremely grateful for the opportunity she gave me and for all the time she took out of her busy schedule to mentor me. She has been incredibly understanding, flexible, supportive, and very patient throughout the entire journey. She guided me with a structured approach and helped me learn things the right way. I genuinely couldn’t have asked for a better mentor.

I am also thankful to the extended team members, Rita Hui and Judy Joseph. Rita was very supportive and encouraging, and I really appreciate her enabling this opportunity within her team. Judy provided valuable evaluation, guidance, and quick review cycles, which helped me move forward efficiently.

A big thank you to the LFX team — especially Evan Harrison and Tracey Li. They oversaw and managed the entire program end to end, ensured the onboarding and logistics were smooth and were extremely patient throughout the process. I had very long email chains with them because of uncertainties around my visa situation and they were always understanding, calm and positive. A special mention to Sriji Ammanath from the LFX HR team for kindly accommodating multiple requests due to my university policy constraints.

Thanks as well to the other mentors in this program. I appreciate the time and effort they put into supporting their mentees and sharing their experience throughout the program. I also want to thank the reviewers and project maintainers who took the time to review my PRs, provide feedback, and guide the merge process. Their input helped me refine my contributions and understand SONiC’s workflows better.

Get Involved

Interested in contributing to SONiC? Join the community and get involved through the wiki, mailing lists, and working groups.